For Jobs Data, Textkernel’s real-time database for labor market analysis, we crawl the web for online job ads. Of course, ensuring the high quality of this database raises many technical challenges. One of them is the detecting duplicates of job ads. In this blog post we explain how Textkernel solves this problem.

The average online job posting has many duplicates

When you work with large volumes of web data, sooner or later you encounter the problem of duplicate content. Many web pages are not unique: a Google paper suggests that around 30% of web pages are near-duplicates.

In Jobs Data, where we spider online job ads in eight countries, this issue is even more prominent: we found out that an average job ad is reposted 2 to 5 times (depending on the country), which makes the fraction of duplicates as high as 50–80%. This is not surprising: companies advertise their jobs as widely as possible to attract more good applicants: on corporate sites, on general and specialized job boards, via staffing agencies, etc. This makes identifying and grouping duplicate ads an essential step in our data processing.

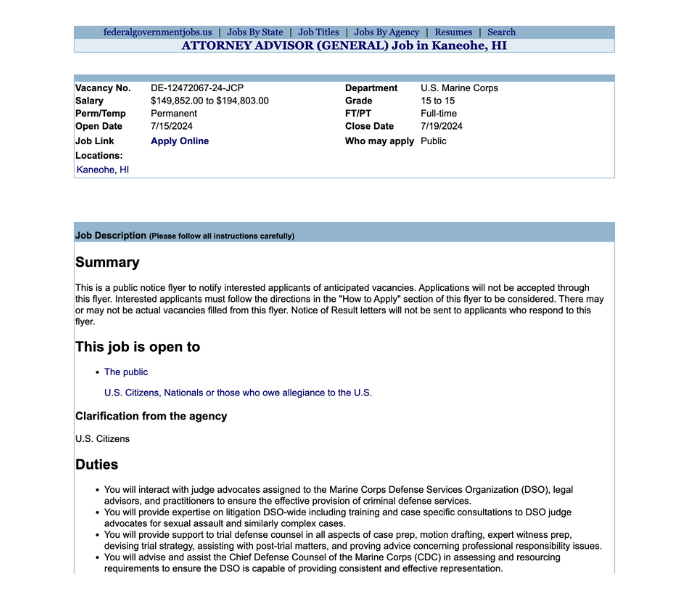

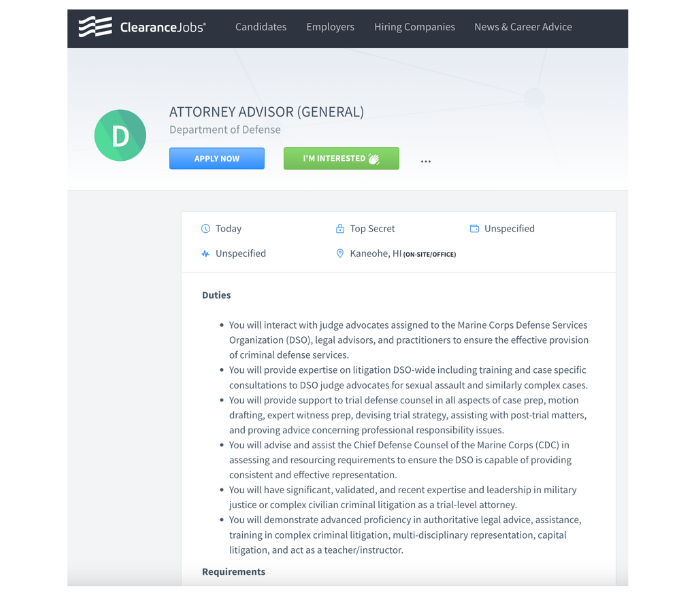

Near-duplicate jobs ads are rarely identical: when posting an ad on a different site, text is often restructured, shortened or extended and metadata is often changed to match the site’s data model. Consider two postings of the the same job shown below:

Textual similarity

Efficient methods for finding similar web pages are almost as old as the web itself: a seminal paper Syntactic clustering of the web by A. Broder et al. appeared in 1997. In Jobs Data we use a classic approach based on shingling and min-wise permutations.

As we show below, a relatively simple implementation can easily deal with tens of millions of documents. For datasets with billions of documents, a more sophisticated implementation and/or a different method is needed: see the blog post Near-Duplicate Detection for an overview and the paper Detecting Near-Duplicates for Web Crawling for details. With shingling, we convert every text document into a set of all sequences (shingles) of consecutive words as they occur in the document.

For example, if we consider 6-word sequences for the text “Well established and respected Law Office in Downtown Bakersfield is in need of a temporary Legal Assistant …”, the shingles will include:

– “Well established and respected Law Office”

– “established and respected Law Office in”

– “and respected Law Office in Downtown” etc.

We can measure textual similarity between two documents as the overlap between their sets of shingles. For our example “Legal secretary” ads, the two documents share 179 out of total 484 shingles between them, which gives us the similarity of 37%. It may seem low, given that the job descriptions are nearly identical: it is mainly due to the different formatting of metadata (location, company name, phone) and missing text on equal opportunity employment. It is clear that for job ads, using a simple similarity threshold to decide whether two ads are duplicates would not be sufficient.

Finding similar documents efficiently

To avoid storing and comparing hundreds of shingles for every document, we apply a locality sensitive hashing scheme as follows. We first map each shingle of a document to a 64-bit integer using a good hash function. Let’s call these integers shingle hashes. We take a fixed random integer permutation, apply it to all shingle hashes from the document and take the smallest value – this is the first value we will store. We repeat this M times with different fixed random permutations, to get a list of M integers: we call this the sketch of the document. It turns out that in order to estimate similarity of two documents we only need to count the number of matching integers in their sketches. This means we only need to store M integers for every document. By selecting appropriate value for M we can find a good trade-off between the accuracy of the estimation and the storage requirements.

How do we store document sketches for quick lookup of similar documents? We use a common technique: an inverted index. We compute sketches for all documents in our dataset, and for every integer we observe, we store a list of documents whose sketches contain this integer. To find similar documents for a new input, we only need to merge document lists for the M integers in its sketch. Time- and memory-efficient management of such indices is what Elasticsearch is very good at, so we use it to index our sketches and retrieve possible near-duplicates. We update the index continuously as new documents are spidered in Jobs Data.

Specifics for job ad deduplications

As discussed above, we use shingling, min-wise permutation hashing and inverted indexing to find job ads textually similar to a new input document. For general purpose near-duplicate detection, this is often all that is needed: a simple threshold on text similarity between the input and the candidates is enough to decide whether the input is a duplicate. For job ads such threshold-based approach is not sufficient: as we have seen in our example, true duplicates can have similarity as low as 37%.

In Jobs Data, we complement text-based retrieval with a few more tricks:

– We apply machine learning and rule-based techniques to remove irrelevant content (boilerplate text, navigation, banners, ads, etc.)

– We use our semantic parser trained specifically for job ads to identify text sections containing job descriptions and candidate requirements (as opposed to the general description of the advertising company, which is often repeated on many unrelated job ads of the company)

– For every new job ad, we use shingling as described above to find candidates with substantial text overlap.

– We train a classifier to predict whether a given input and a retrieved candidate are indeed postings of the same job. The classifier relies on the text overlap and the match between other properties of the postings, as extracted by our semantic parser: organization name, profession category, contact information, etc.

Conclusion

Textkernel’s deduplication system for job ads effectively handles the challenge of near-duplicate detection using techniques like shingling, min-wise permutation hashing, inverted indexing, and machine learning classifiers. This multi-faceted approach ensures high precision in identifying duplicates while minimizing false positives. Continuous evaluation and refinement of our methods guarantee that our Jobs Data database remains accurate and up-to-date.

Some useful links

– xxhash: a good hashing library for Python

– Integer hash functions: mixing reversible hash functions by Thomas Wang that can be used to construct pseudo-random permutations